Generative AI Testing: Revolutionizing Software Quality Assurance

Artificial intelligence (AI) has developed rapidly, and generative AI has emerged as a groundbreaking technology that is reshaping software testing along with many other fields.

Generative testing frameworks use AI models to automate test case creation and other complex testing tasks, speeding up the software development lifecycle. Integrating generative AI into testing can help companies achieve more precise results, faster test cycles, and wider test coverage as they build reliable applications.

In this blog post, I will analyze how generative AI testing is revolutionizing software quality assurance and review some of the top AI testing tools.

Let’s start with understanding generative AI testing!

What is Generative AI Testing?

Generative AI testing uses AI models built on deep learning and natural language processing (NLP) to create automated test cases, scripts, and datasets. Its self-learning capabilities set it apart from standard test automation: instead of relying on manually written and maintained scripts, it derives test scenarios from existing code, user behavior, and historical data.

Some key components of generative AI testing include:

- AI-Powered Test Case Generation: Using AI algorithms to generate meaningful test cases based on software requirements and historical defects.

- Self-Learning Test Automation: Continuous learning and adaptation of test cases based on real-time application updates and usage patterns.

- Test Data Synthesis: Creating synthetic data for testing purposes while ensuring privacy compliance and data diversity.

- Autonomous Bug Detection: Identifying and categorizing software defects without human intervention.
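Test data synthesis is the easiest of these components to picture in code. The sketch below is a deliberately simple, rule-based stand-in for a generative model: it produces reproducible fake user records that mimic the shape of real data without containing any real personal information. The `SyntheticUser` fields, the value pools, and the `synthesize_users` helper are all hypothetical.

```python
import random
import string
from dataclasses import dataclass


@dataclass
class SyntheticUser:
    user_id: str
    name: str
    email: str
    age: int
    country: str


# Hypothetical value pools; a real generator would learn realistic
# distributions from (anonymized) production data instead.
FIRST_NAMES = ["Ana", "Kenji", "Amara", "Lukas", "Priya", "Mateo"]
COUNTRIES = ["US", "DE", "IN", "JP", "BR", "NG"]


def synthesize_users(count: int, seed: int = 42) -> list[SyntheticUser]:
    """Generate fake but structurally realistic user records with no real PII."""
    rng = random.Random(seed)  # fixed seed keeps the test data reproducible
    users = []
    for i in range(count):
        name = rng.choice(FIRST_NAMES)
        suffix = "".join(rng.choices(string.ascii_lowercase, k=5))
        users.append(
            SyntheticUser(
                user_id=f"user-{i:05d}",
                name=name,
                # .test is a reserved top-level domain, so these addresses never reach a real inbox
                email=f"{name.lower()}.{suffix}@example.test",
                # mix boundary ages (18 and 120) with typical values
                age=rng.choice([18, 120, rng.randint(19, 90)]),
                country=rng.choice(COUNTRIES),
            )
        )
    return users


if __name__ == "__main__":
    for user in synthesize_users(3):
        print(user)
```

Fixing the seed matters in testing: a failing run can be reproduced with exactly the same data.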

How Generative AI Enhances Software Testing

Generative AI testing introduces multiple advantages that improve the efficiency and effectiveness of software quality assurance.

1. Automated Test Case Generation

Traditional test case design can be labor-intensive and time-consuming. Generative AI automates this process by analyzing source code, requirements, and logs to generate high-quality test cases. AI models can anticipate edge cases, improving test coverage and reducing human effort.
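Edge cases are easier to reason about with a concrete target. A generative model is expected to propose cases like these on its own; this sketch instead uses classical boundary value analysis, a deterministic technique, purely to show the kind of edge cases involved. `IntFieldSpec`, `boundary_cases`, and the example `validate_quantity` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntFieldSpec:
    """Hypothetical requirement: an integer input field with an inclusive valid range."""
    name: str
    minimum: int
    maximum: int


def boundary_cases(spec: IntFieldSpec) -> list[tuple[int, bool]]:
    """Return (input value, should_be_accepted) pairs using boundary value analysis."""
    lo, hi = spec.minimum, spec.maximum
    # just below, at, and just inside each bound, plus a nominal midpoint
    candidates = [lo - 1, lo, lo + 1, (lo + hi) // 2, hi - 1, hi, hi + 1]
    # dict.fromkeys removes duplicates (narrow ranges) while keeping order;
    # the expected result is derived from the spec, not hard-coded
    return [(value, lo <= value <= hi) for value in dict.fromkeys(candidates)]


def validate_quantity(qty: int) -> bool:
    """Example system under test: accepts order quantities from 1 to 100."""
    return 1 <= qty <= 100


if __name__ == "__main__":
    spec = IntFieldSpec("quantity", 1, 100)
    for value, expected in boundary_cases(spec):
        assert validate_quantity(value) == expected, f"{spec.name}={value}"
    print("all boundary cases passed")
```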

2. Intelligent Test Maintenance

One of the biggest challenges in test automation is maintaining test scripts when software undergoes frequent changes. Generative AI can adapt test cases dynamically, reducing script maintenance overhead and ensuring that tests remain relevant as the application evolves.
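Adaptive maintenance is often implemented as "self-healing" locators. The sketch below is a simplified, hypothetical illustration that uses plain string similarity (Python's `difflib`) rather than a learned model: when a stored locator no longer matches, it picks the most similar element on the page and returns an updated locator. The page model (a list of attribute dictionaries), the 0.6 threshold, and all function names are assumptions, not any tool's API.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical page model: each element is a dict of attribute name -> value.
Element = dict[str, str]


def find_exact(page: list[Element], locator: Element) -> Optional[Element]:
    """Return the first element whose attributes match every entry in the locator."""
    for element in page:
        if all(element.get(key) == value for key, value in locator.items()):
            return element
    return None


def similarity(element: Element, locator: Element) -> float:
    """Average string similarity (0..1) over the attributes named in the locator."""
    if not locator:
        return 0.0
    scores = [SequenceMatcher(None, element.get(k, ""), v).ratio() for k, v in locator.items()]
    return sum(scores) / len(scores)


def self_healing_find(
    page: list[Element], locator: Element, threshold: float = 0.6
) -> tuple[Optional[Element], Element]:
    """Try the stored locator; if it no longer matches, fall back to the closest element.

    Returns (element or None, locator to store for the next run).
    """
    match = find_exact(page, locator)
    if match is not None:
        return match, locator
    best = max(page, key=lambda el: similarity(el, locator), default=None)
    if best is None or similarity(best, locator) < threshold:
        return None, locator  # too uncertain to heal: fail and leave it for human review
    healed = {k: best[k] for k in locator if k in best}  # refresh locator with current values
    return best, healed


if __name__ == "__main__":
    # The "Submit" button was renamed in a new release.
    page = [
        {"tag": "button", "id": "cancel-button", "text": "Cancel"},
        {"tag": "button", "id": "submit-button", "text": "Submit order"},
    ]
    old_locator = {"tag": "button", "id": "submit-btn", "text": "Submit"}
    element, new_locator = self_healing_find(page, old_locator)
    print(element, new_locator)
```

The threshold is the key design choice: healing too eagerly can silently click the wrong element, so below it the test fails instead.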

3. Faster Test Execution

AI-powered test automation speeds up feedback by using predictive analytics to prioritize critical test cases and run them first. This helps identify high-impact defects early in the development cycle, significantly reducing the time spent on debugging and rework.
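Prioritization boils down to scoring each test and running the riskiest first. The sketch below replaces a trained predictive model with a hand-written heuristic (historical failure rate plus overlap with the files changed in a commit); the 50/50 weights, the `HistoricalTest` fields, and the availability of a test-to-file coverage mapping are all assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class HistoricalTest:
    name: str
    runs: int  # past executions
    failures: int  # past failures
    covered_files: set[str] = field(default_factory=set)  # assumed available from coverage data


def risk_score(test: HistoricalTest, changed_files: set[str]) -> float:
    """Hypothetical heuristic: 50% historical failure rate, 50% overlap with changed code."""
    failure_rate = test.failures / test.runs if test.runs else 1.0  # never-run tests count as risky
    if test.covered_files:
        overlap = len(test.covered_files & changed_files) / len(test.covered_files)
    else:
        overlap = 0.0
    return 0.5 * failure_rate + 0.5 * overlap


def prioritize(tests: list[HistoricalTest], changed_files: set[str]) -> list[HistoricalTest]:
    """Order tests so the riskiest run first; the sort is stable, so ties keep input order."""
    return sorted(tests, key=lambda t: risk_score(t, changed_files), reverse=True)


if __name__ == "__main__":
    suite = [
        HistoricalTest("test_login", runs=200, failures=2, covered_files={"auth.py"}),
        HistoricalTest("test_checkout", runs=150, failures=15, covered_files={"cart.py", "payment.py"}),
        HistoricalTest("test_profile", runs=100, failures=0, covered_files={"profile.py"}),
    ]
    for t in prioritize(suite, changed_files={"payment.py"}):
        print(t.name)  # test_checkout, then test_login, then test_profile
```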

4. Enhanced Test Coverage

Generative AI can analyze large datasets and user interactions to uncover test scenarios that human testers may overlook. By simulating diverse usage patterns, AI helps extend testing across different environments, devices, and user behaviors.

5. Improved Software Reliability

AI-driven testing enhances software reliability by continuously learning from past test results and defect patterns. It proactively identifies potential issues before they impact end-users, resulting in higher customer satisfaction and fewer post-production failures.

Generative AI Testing Tools Overview

Several AI-driven testing tools are available to help organizations implement generative AI testing effectively. Here’s an overview of three leading AI testing tools:

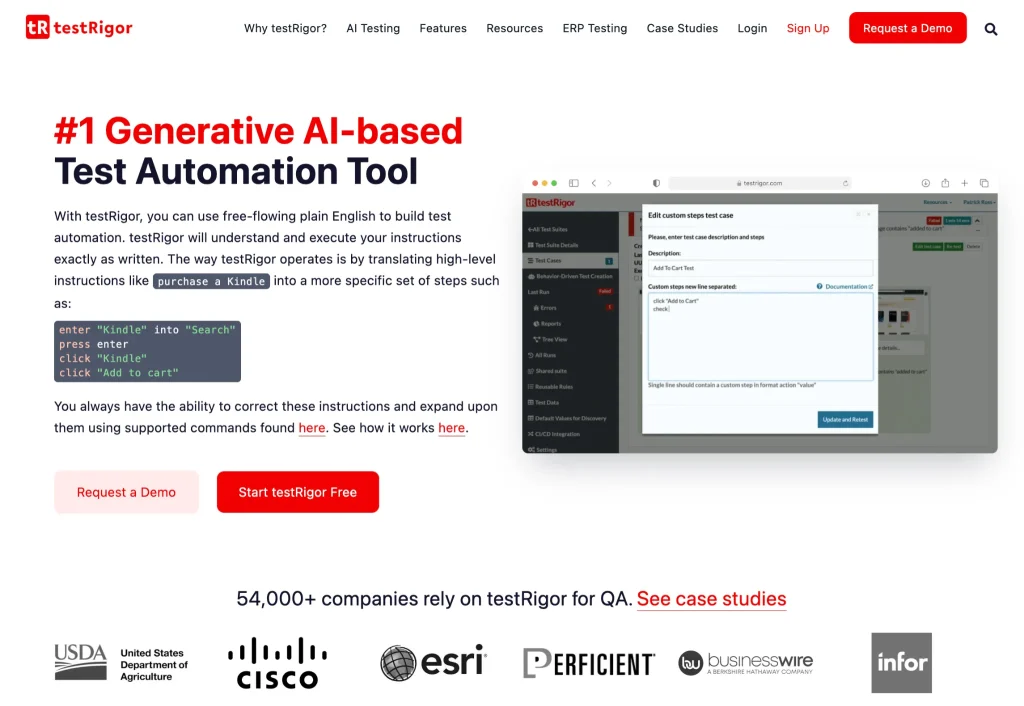

1. testRigor

testRigor describes itself as the #1 generative AI-based codeless test automation tool and claims that teams spend 99.5% less time on test maintenance. Unlike traditional tools, it lets users write and generate end-to-end tests in plain English, so even non-engineers can contribute.

Key Features:

- Quick and simple test creation with Generative AI

- Single tool for web, mobile (hybrid/native), desktop, and API testing

- 15X faster test creation compared to Selenium

- Near-zero maintenance – no locators needed

- Ultra-stable tests without CSS/XPath reliance

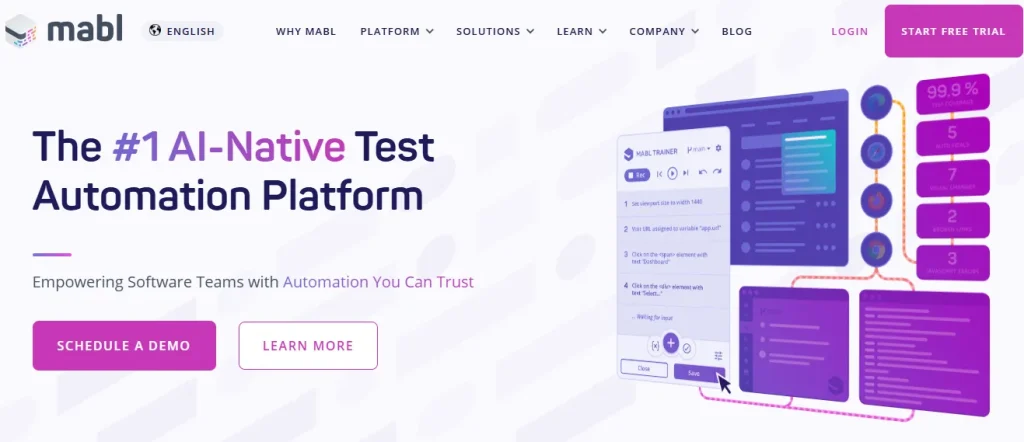

2. Mabl

Mabl is an intelligent test automation platform that integrates AI and machine learning to optimize end-to-end testing. It provides self-maintaining tests that adapt to application changes, improving test reliability.

Key Features:

- Intelligent test automation with self-healing capabilities

- Built-in visual testing and regression analysis

- Seamless integration with DevOps workflows

- Cloud-based execution with detailed analytics

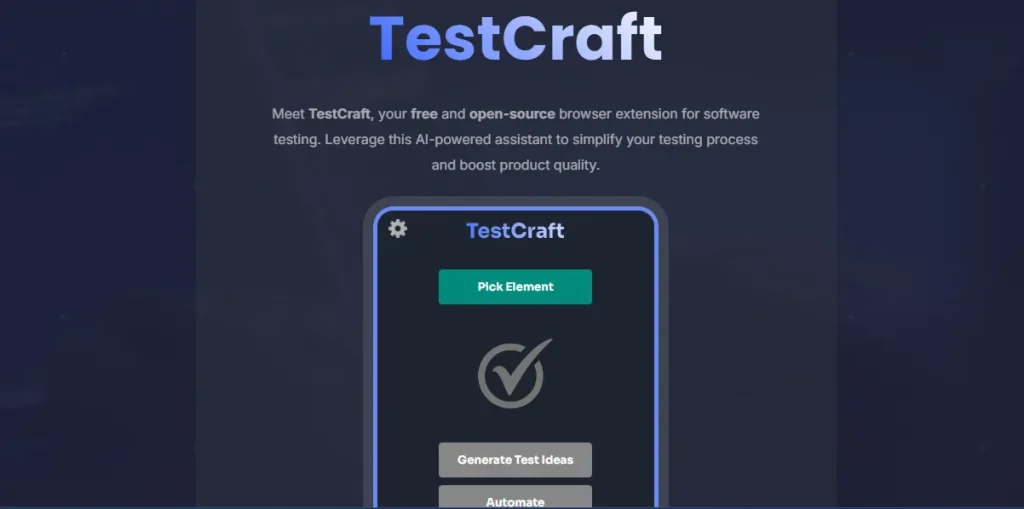

3. TestCraft

TestCraft is a codeless AI-powered test automation tool that enables testers to create automated tests using a drag-and-drop interface. It leverages machine learning to detect application changes and adjust tests accordingly.

Key Features:

- Codeless test creation with a visual editor

- AI-based test maintenance and self-healing

- Continuous testing with real-time feedback

- Integration with popular CI/CD and DevOps tools

Challenges of Generative AI Testing

Despite its advantages, generative AI testing presents several challenges that organizations must address for successful implementation.

1. Data Privacy and Security

Generative AI relies heavily on data to create meaningful test cases. Ensuring that sensitive user data is anonymized and protected is critical to preventing security breaches and regulatory violations.
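One common safeguard is to pseudonymize personal fields before any data reaches a model or a test environment. The sketch below uses keyed hashing (HMAC-SHA256 from Python's standard library) so the same input always maps to the same token, which keeps joins between records working. The field names, the regex, and the hard-coded key are illustrative assumptions; note that keyed hashing is pseudonymization rather than true anonymization, so it reduces but does not eliminate re-identification risk.

```python
import hashlib
import hmac
import re

# Assumption: in practice this key comes from a secret store and never ships with test data.
SECRET_KEY = b"replace-with-a-key-from-your-secret-store"

# Simple illustrative email pattern; not a complete RFC 5322 matcher.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace a value with a deterministic keyed hash token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"anon-{digest[:12]}"


def scrub_record(record: dict, pii_fields: tuple[str, ...] = ("name", "email", "phone")) -> dict:
    """Pseudonymize known PII fields and mask email addresses embedded in free text."""
    clean = {}
    for field_name, value in record.items():
        if field_name in pii_fields and isinstance(value, str):
            clean[field_name] = pseudonymize(value)
        elif isinstance(value, str):
            clean[field_name] = EMAIL_RE.sub(lambda m: pseudonymize(m.group()), value)
        else:
            clean[field_name] = value  # non-string values pass through unchanged
    return clean


if __name__ == "__main__":
    raw = {"name": "Jane Doe", "email": "jane@example.com", "note": "Contact jane@example.com", "orders": 3}
    print(scrub_record(raw))
```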

2. AI Model Bias

AI models can inherit biases from training data, leading to biased test scenarios that may not adequately represent real-world usage. Continuous model refinement and diverse training datasets are necessary to minimize bias.
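A practical check against skewed generation is to compare the mix of generated scenarios with real-world usage. This is a minimal sketch, assuming each scenario is a dictionary and that expected usage shares are available (for example from product analytics); the `underrepresented` helper and the 0.5 tolerance are arbitrary illustrative choices.

```python
from collections import Counter


def underrepresented(scenarios, attribute, expected_share, tolerance=0.5):
    """Flag values of `attribute` that are much rarer in generated scenarios than in real usage.

    expected_share maps each value to its assumed real-world share.
    A value is flagged when observed_share < tolerance * expected_share.
    Returns {value: observed_share} for every flagged value.
    """
    counts = Counter(scenario[attribute] for scenario in scenarios)
    total = sum(counts.values())
    flagged = {}
    for value, expected in expected_share.items():
        observed = counts[value] / total if total else 0.0  # Counter returns 0 for missing values
        if observed < tolerance * expected:
            flagged[value] = round(observed, 3)
    return flagged


if __name__ == "__main__":
    generated = [{"device": "desktop"}] * 8 + [{"device": "mobile"}] * 2
    usage = {"desktop": 0.4, "mobile": 0.5, "tablet": 0.1}
    print(underrepresented(generated, "device", usage))  # {'mobile': 0.2, 'tablet': 0.0}
```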

3. Complexity of AI Integration

Integrating generative AI into existing test automation frameworks can be complex. Organizations must invest in AI expertise and tools that seamlessly integrate with their current testing infrastructure.

4. Interpretability of AI Decisions

Understanding how AI generates test cases and makes testing decisions can be challenging. Testers must establish mechanisms to interpret AI-driven test results and validate their accuracy.

5. Dependence on High-Quality Training Data

The effectiveness of generative AI testing depends on the quality and diversity of training data. Poor or insufficient data can lead to unreliable test case generation and missed defects.

Future Trends in Generative AI Testing

As AI technology continues to evolve, generative AI testing is expected to become more sophisticated and widespread. Some emerging trends include:

1. AI-Augmented Exploratory Testing

Generative AI will assist human testers in exploratory testing by suggesting test scenarios based on real-time analysis of user behavior and system responses.

2. Self-Healing Test Automation

AI-driven test automation frameworks will increasingly self-heal, automatically updating test scripts and adapting to application changes without manual intervention.

3. AI-Powered Security Testing

Generative AI will play a significant role in security testing by detecting vulnerabilities, generating penetration test cases, and simulating cyberattacks to strengthen software defenses.
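Generated security test cases often build on fuzzing: feeding many malformed inputs to a target and watching for unexpected failures. The sketch below is a plain random mutation fuzzer, not a generative model; an AI-driven tool would aim to propose smarter inputs, but the surrounding harness looks similar. `parse_username` is a hypothetical target with a deliberately planted bug, and the payload list is illustrative only.

```python
import random


def mutate(seed_input: str, rng: random.Random) -> str:
    """Apply one random mutation: replace a character, insert a payload, or truncate."""
    payloads = ["'", '"', "<script>", "../", "%00", "A" * 1024]  # attack-style fragments
    choice = rng.randrange(3)
    if choice == 0 and seed_input:
        i = rng.randrange(len(seed_input))
        return seed_input[:i] + chr(rng.randrange(32, 127)) + seed_input[i + 1:]
    if choice == 1:
        i = rng.randrange(len(seed_input) + 1)
        return seed_input[:i] + rng.choice(payloads) + seed_input[i:]
    return seed_input[: rng.randrange(len(seed_input) + 1)]


def fuzz(target, seeds, iterations=1000, seed=0):
    """Feed mutated inputs to `target` and collect those that raise unexpected exceptions."""
    rng = random.Random(seed)  # seeded so any crash can be reproduced
    crashes = []
    for _ in range(iterations):
        candidate = mutate(rng.choice(seeds), rng)
        try:
            target(candidate)
        except ValueError:
            pass  # expected, well-handled rejection of bad input
        except Exception as exc:  # anything else is a potential bug worth triaging
            crashes.append((candidate, repr(exc)))
    return crashes


def parse_username(raw: str) -> str:
    """Hypothetical system under test with a deliberate bug on empty input."""
    if len(raw) > 64:
        raise ValueError("username too long")
    return raw[0].upper() + raw[1:]  # raises IndexError when raw == ""


if __name__ == "__main__":
    for bad_input, error in fuzz(parse_username, seeds=["alice", "bob"])[:3]:
        print(repr(bad_input), error)
```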

4. Continuous Testing in DevOps

AI-driven continuous testing will be deeply integrated into DevOps pipelines, enabling real-time feedback and shortening release cycles.

5. AI-Assisted Test Management

AI will assist test managers in optimizing test strategies, prioritizing test cases based on risk analysis, and providing actionable insights through predictive analytics.

Conclusion

Generative AI is changing software quality assurance by automating test case development, broadening test coverage, and improving software reliability. The future of AI-driven testing looks promising, but businesses still need to overcome challenges around AI bias, data security, and integration.

Adopting generative AI in testing allows companies to build better software faster and more efficiently, which can help them stay ahead of competitors. As AI capabilities grow, generative AI will play an expanding role in software testing, driving new quality assurance techniques and transforming how software is developed and maintained.